In our tech-savvy lifestyles, AI voice assistants have become a convenient and helpful companion, always at our beck and call. Thanks to advances in AI, voice assistants can now produce human-like responses, and assistants with female voices, such as Alexa (Amazon), Siri (Apple) and Cortana (Microsoft), have become household names.

Despite their popularity, these ‘female’ AI assistants have raised concerns about noticeably gender-biased representations. The UN published a report titled “I’d Blush if I Could” after finding a worrying number of gender stereotypes presented by these assistants.

The gender stereotypes portrayed by AI voice assistants

Once you have purchased your very own voice assistant device, the first thing you will hear is the voice of a young woman, as is typical of most AI voice assistants on the market. Although many people might not think twice about the assistant being ‘female’ by default, its portrayal of a woman can carry harmful associations.

The UN report observes that most AI assistants have the voices of young women and display submissive traits, strongly reinforcing gender stereotypes. Most female AI assistants come across as docile, unopinionated, complacent and “eager-to-please helpers”.

The UN’s report on AI voice assistants was prompted by alarming responses from voice assistants like Siri. When a user said “Kiss my f******* a** Siri”, the female voice assistant would calmly respond, “I’d blush if I could”.

If such a portrayal of female AI voice assistants continues to be tolerated, there could be lasting harmful effects on the gender divide within the tech industry.

The effects of gender-biased AI voice assistant products

Along with Siri’s responses, the UN report also finds that Alexa and Cortana always respond positively, never ‘telling off’ a user, however cruel or hurtful their remarks. This gives the impression that tech companies are conditioning their users to fall back on old-fashioned and harmful perceptions of women.

A number of issues arise from the way AI assistant devices portray women, and these portrayals may affect how women are treated in real life. Here are some ways gendered AI voice assistants could be harmful:

Reinforcing the spread of gender stereotypes

As AI assistants rise in popularity, more people are exposed to their gender-biased nature. Representing women as always agreeable and docile ultimately reinforces gender biases against women.

Suggesting that ‘female’ voices are non-authoritative

Setting a female voice as the default in most AI voice assistant devices may give the impression that a woman’s voice is less authoritative than a man’s. A few studies have found that, regardless of the listener’s gender, people typically prefer female voices for assistance and male voices for commands.

Why such gendered stereotypes came to be

According to virtual event solutions company Evia, women make up less than 20% of the tech industry in the US. The UN’s report found that gender imbalances in the male-dominated digital sector can become ‘hard-coded’ into its products. This lack of female representation in the tech industry is one reason why technological products portray women in stereotypical ways.

The UN report calls for more women to take part in developing the technologies used to train AI machines, as such machines “must be carefully controlled and instilled with moral codes.” This is a vital step towards ensuring that, as AI technology continues to develop, its human-like qualities are no longer hardwired with sexism.

The report also suggests training AI machines to respond to commands and questions in a gender-neutral way, replacing sexist training data with gender-sensitive data.

Meet Q: The very first genderless voice assistant


In the hope of eradicating gender bias in technology, specifically in AI voice assistants, a team of linguists, technologists and sound designers created Q, the very first genderless voice assistant. With Q, this group of experts aims to end gender bias and promote inclusivity in voice technology.

The Q team recorded the voices of 24 people who identified as male, female, transgender or non-binary, in search of a voice that falls outside the male/female binary. From these recordings, they identified a gender-neutral frequency range, which eventually became the voice of Q.

Although developing a genderless AI voice assistant is a positive step towards inclusivity, there is still a long way to go, and inclusivity in technology can only go as far as our society does. Gendered voice assistants display these biases because of the data used to train their machine learning models, and that data is drawn entirely from human behaviour: our behaviour.

More than anything, breaking such stereotypes starts with us.

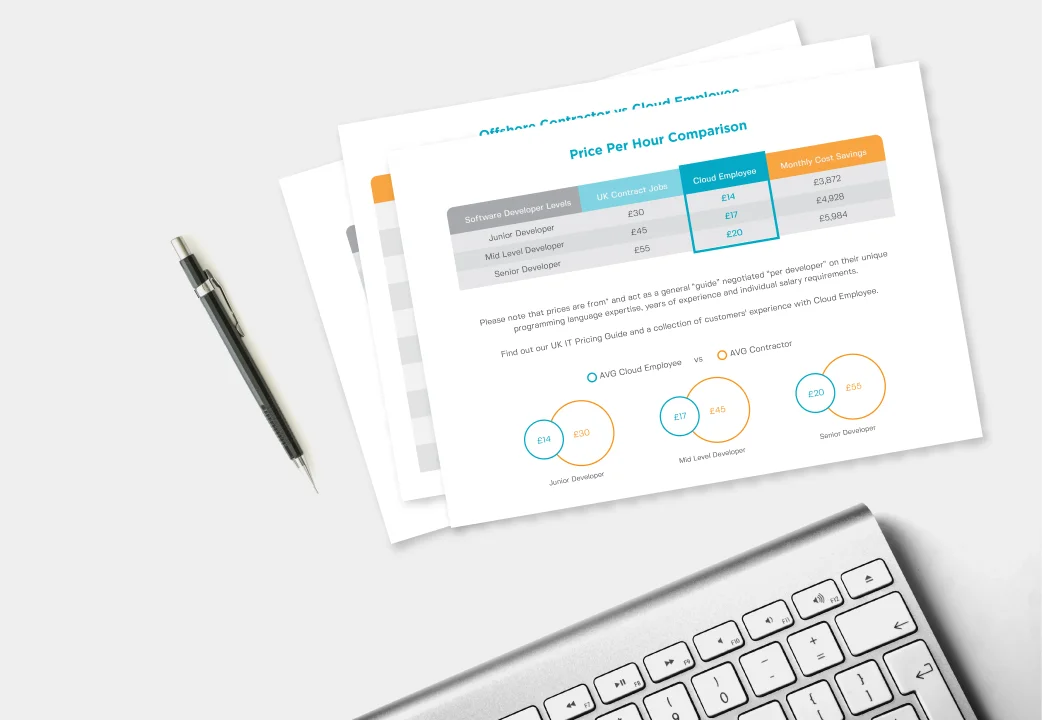

